Reliability is one of the major ongoing goals of data center operators. Several conditions have to be in place to achieve it, and with rapid technological advancement, they have to be constantly updated as well. Above all, reliability requires a solid foundation of data center redundancy.

Every organization depends on seamless access to data and applications; however, no single system is immune to failure. Redundant design across power, cooling, and network systems is crucial for a facility to remain operational even when critical components have to undergo maintenance or experience an unexpected outage or malfunction.

Because redundancy can be implemented in many ways, the industry relies on standard frameworks such as N-levels and Uptime Institute’s tier classification to describe design expectations and data center performance. These classifications reveal how a facility manages faults, maintenance, and recovery.

Behind tier classifications and ratings, there’s a whole set of engineering decisions taking cost, complexity, and risk into consideration. Our complete guide to redundancy and tier certifications examines those decisions in detail, explaining how data center redundancy shapes real uptime and what really distinguishes one level of resilience from another. Let’s dig in.

What is Data Center Redundancy?

In a nutshell, data center redundancy is the design and implementation of backup systems that prevent downtime and operational disruption. It involves creating alternative pathways and reserve capacity so that critical functions can continue even if a primary component fails.

The most illustrative examples are power, cooling, and networking. Power redundancy relies on backup generators and uninterruptible power supply (UPS) units that keep electricity flowing if a grid outage hits. The same rule applies to cooling and network systems: backup chillers, switches, and even fiber routes maintain stability and connectivity when the primary infrastructure fails due to unexpected events.

Note that data center redundancy focuses on the facility’s infrastructure rather than the IT workloads it hosts. It does not include server-level backups or mirrored systems managed by IT teams. Instead, the objective is to protect essential operations (power, cooling, and network availability) so that housed servers and applications remain functional even during unexpected hardware or utility failures.

Power System Redundancy

Reliability and uptime depend on power, which makes power system redundancy the cornerstone of data center redundancy.

A redundant electrical design ensures that operations remain uninterrupted during faults or scheduled maintenance. Most modern facilities rely on dual utility feeds from independent substations, complemented by diesel generators that start automatically during grid outages. Uninterruptible power supply (UPS) banks bridge the gap between a utility failure and generator start-up, maintaining voltage stability and preventing service disruption.

In high-availability environments, power distribution is divided into dual, independent paths (commonly labeled A and B), each capable of carrying the full operational load. This configuration allows switchgear components to be maintained or replaced without downtime. Additionally, static transfer switches redirect the load from one feed to the other within milliseconds when irregularities occur.
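The A/B sizing rule described above can be expressed as a simple capacity check. The following is a minimal sketch, not an operator's actual tooling: the function name, the kilowatt figures, and the 400 kW path rating are all hypothetical values chosen for illustration.

```python
def survives_path_loss(load_a_kw: float, load_b_kw: float,
                       path_capacity_kw: float) -> bool:
    """Return True if a single path can carry the combined A + B load.

    In a dual-path design, losing one path shifts its load to the other,
    so the sum of both loads must fit within one path's rated capacity.
    """
    return load_a_kw + load_b_kw <= path_capacity_kw


# Hypothetical example: two paths rated at 400 kW each.
print(survives_path_loss(180.0, 190.0, 400.0))  # combined 370 kW
print(survives_path_loss(260.0, 250.0, 400.0))  # combined 510 kW
```

In the first case the surviving path can absorb the full load; in the second it cannot, even though neither path is overloaded during normal operation. This is why each path in such a design typically runs at no more than about half of its rating.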

To further strengthen data center redundancy, UPS battery strings are arranged in N+1 or 2N layouts, providing both isolation and spare capacity. Data center operators conduct regular and thorough load testing to confirm that the system is ready and running at peak performance. Facilities built on 2N power architectures usually achieve the industry’s highest redundancy ratings, which directly support stronger uptime metrics and greater operational confidence.

Cooling System Redundancy

Cooling is another vital part of data center redundancy. Redundant cooling ensures that the loss of a single chiller or CRAH unit doesn’t compromise performance. Most facilities are designed with an N+1 configuration, keeping one extra unit available to cover unexpected failures or scheduled maintenance. This arrangement stabilizes airflow and temperature even during high-demand conditions.

In advanced environments, this reliability is further improved by dividing systems into closed or segmented cooling loops. This method allows technicians to isolate faults so they can replace pumps, compressors, or other broken parts without interrupting data hall operations. Continuous monitoring across hot and cold aisles provides early warnings of temperature fluctuations, which allows for quick and precise adjustments.

In high-density deployments, 2N chilled-water pumps or dual refrigerant circuits maintain thermal equilibrium even if one system goes offline. The same redundancy principle extends to cooling towers and heat-rejection infrastructure. Consistent thermal capacity not only prevents cascading faults but also protects power systems and critical hardware. Investing in this approach reinforces the layered reliability that defines data center redundancy.

Network Redundancy

The next critical component of data center redundancy is network redundancy. Its role cannot be overstated: network redundancy is what maintains external connectivity when carriers or internal network devices fail.

Reliable facilities connect to at least two independent network providers, each entering the building through separate conduits and terminating in distinct meet-me rooms. This physical separation minimizes the risk of simultaneous disruptions. Redundant routers, switches, and firewalls add further resilience. Well-designed routing protocols enhance the benefits by automatically redirecting traffic along alternate paths to sustain continuous communication.

Across multi-building or regional campuses, fiber rings and geographically diverse routes extend network resilience beyond a single facility. Because connectivity failures are the most visible to end users, network redundancy directly influences uptime and customer experience. As a result, it stands as one of the most important elements of data center redundancy: it safeguards both operational continuity and the credibility of the services the facility delivers.

How to Determine Data Center Redundancy

Evaluating data center redundancy means understanding both the quantity of backup systems and how those systems interact with each other under real conditions.

There are two frameworks providers use to describe data center redundancy: data center tiers and N-levels. Both offer a perspective on fault tolerance and maintenance flexibility.

Tier classifications, developed by the Uptime Institute, define a facility’s ability to maintain operations during maintenance or unplanned failure.

N-levels, on the other hand, quantify redundancy by comparing required capacity (N) with installed capacity (N+1, 2N, or 2N+1).

However, these designations alone cannot convey true reliability. A data center can advertise 2N power redundancy yet operate with N+1 cooling or a single network path. A proper assessment examines each subsystem (power, cooling, connectivity) according to its own redundancy rating and testing record.
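The per-subsystem view can be illustrated with a short sketch that looks for the weakest rating in a facility. Everything here is an assumption for illustration: the `LEVEL_RANK` ordering is a simplification (how N+2 compares with 2N depends on the size of N and on topology), and the facility dictionary is hypothetical.

```python
# Assumed ordering from weakest to strongest. This is a simplification:
# real comparisons depend on N and on how independent the systems are.
LEVEL_RANK = {"N": 0, "N+1": 1, "N+2": 2, "2N": 3, "2N+1": 4}


def weakest_subsystem(ratings: dict[str, str]) -> tuple[str, str]:
    """Return the (subsystem, level) pair with the lowest-ranked rating."""
    name = min(ratings, key=lambda subsystem: LEVEL_RANK[ratings[subsystem]])
    return name, ratings[name]


# Hypothetical facility: 2N power, N+1 cooling, a single network path (N).
facility = {"power": "2N", "cooling": "N+1", "network": "N"}
print(weakest_subsystem(facility))
```

For this hypothetical facility the network is the weak point, even though the headline power rating is 2N.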

Comprehensive evaluations include failover testing reports, generator maintenance logs, and thermal performance data. Operators that want to offer transparency to their customers typically track and publish these details to demonstrate operational reliability instead of relying on inflated marketing claims.

What Are Data Center Tiers?

Data center tiers provide a globally recognized method for ranking facilities according to their uptime and redundancy capabilities. Developed by the Uptime Institute, the four-tier system ranges from Tier I to Tier IV and evaluates how infrastructure handles maintenance, fault tolerance, and service continuity.

Tier certification is optional, but most leading facilities pursue it to validate their reliability and attract enterprise clients. Certification involves a rigorous process that includes document review, on-site inspection, and confirmation that every system meets the claimed redundancy levels.

Tiers serve as a valuable benchmark for comparing facilities, but sustained uptime depends just as much on daily operations, like how equipment is tested, maintained, and documented, as it does on physical design.

What Are N Levels?

N-levels measure redundancy by quantifying spare capacity beyond the baseline required for normal operations. They describe how resilient a facility is to failures:

- N: No redundancy; a single failure disrupts operation.

- N+1: One spare component beyond what’s required to support the load.

- N+2: Two spares, adding additional protection.

- 2N: Two independent systems, each capable of supporting 100% of operations.

- 2N+1: Two full systems plus one spare component for simultaneous maintenance and failure coverage.

These redundancy levels can apply to power, cooling, and network systems. Facilities frequently combine different N-levels across subsystems to balance cost with uptime goals. Understanding N-levels and these relationships helps businesses choose data center providers whose redundancy goals align with their own operational risk and reliability targets.
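The N-level definitions above translate directly into unit counts. The sketch below assumes a hypothetical baseline of four required units (for example, four UPS modules); the function name and the baseline are illustrative only.

```python
def installed_units(n_required: int, level: str) -> int:
    """Return the number of installed units for a given N-level."""
    counts = {
        "N": n_required,             # no spare capacity
        "N+1": n_required + 1,       # one spare unit
        "N+2": n_required + 2,       # two spare units
        "2N": 2 * n_required,        # two full, independent systems
        "2N+1": 2 * n_required + 1,  # two full systems plus one spare
    }
    return counts[level]


# Hypothetical baseline: the load needs 4 units to run.
for level in ("N", "N+1", "N+2", "2N", "2N+1"):
    total = installed_units(4, level)
    print(f"{level}: {total} installed, {total - 4} beyond the baseline")
```

The count alone does not capture everything: 2N also implies two independent systems, which a simple tally of spare units cannot express.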

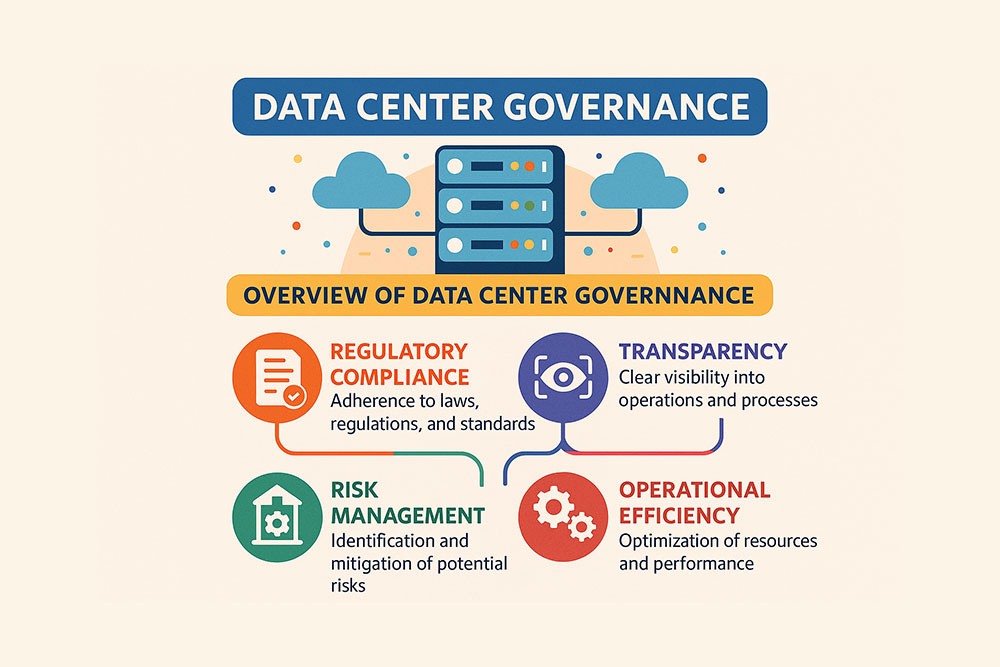

Factors Considered for Assigning Tiers

When the Uptime Institute evaluates a facility, it examines both design and operational criteria that influence redundancy and reliability. The primary factors include:

- Proven uptime performance and service availability.

- Infrastructure design and redundancy ratings for power, cooling, and network systems.

- Staff training, maintenance procedures, and concurrent maintainability practices.

- Security, carrier neutrality, and geographic risk exposure.

These evaluations focus on topology and operational sustainability instead of prescriptive technology requirements. This leaves room for innovation while maintaining consistent global standards, and it ensures that data center redundancy is measured on functional reliability rather than on specific equipment choices.

Understanding Tiers

The Uptime Institute’s tier classification system remains the global benchmark for measuring reliability and data center redundancy. Tiers represent the specific levels of infrastructure resilience, fault tolerance, and operational continuity.

Tiers extend from I to IV, and the progression reflects an increase in the standards for system duplication, maintenance flexibility, and uptime guarantees.

Understanding what each tier represents helps organizations align their data center strategy not only with their workload needs, but also with their regulatory obligations and risk tolerance.

Tier 1

A Tier 1 data center represents the most basic level of availability. It is designed with a single distribution path for both power and cooling and contains no redundant components. This structure delivers approximately 99.671% uptime annually, equating to about 28.8 hours of potential downtime per year. Because every critical system is singular, any component failure or scheduled maintenance requires a complete shutdown.

Tier 1 facilities are cost-effective and straightforward to operate, which makes them suitable for small businesses, startups, and organizations hosting non-critical workloads. They support office applications, backups, or server testing, where occasional interruptions have little or no operational impact. On the flip side, while they may meet basic hosting needs, Tier 1 facilities lack the resiliency expected for enterprise production systems or real-time services.

Tier 2

A Tier 2 facility enhances resilience through limited data center redundancy. These facilities maintain a single power and cooling path, but include additional components, such as extra generators, UPS modules, or cooling units, that provide partial failover capability. This setup reduces the frequency of unplanned outages and increases expected uptime to around 99.741%, or roughly 22.7 hours of downtime per year.

Tier 2 data centers still require downtime during certain maintenance operations, but the added redundancy makes it possible for systems to stay online for longer and also to recover faster from minor faults. This balance of cost and reliability makes Tier 2 suitable for small to mid-sized organizations that need higher service availability but don’t require full concurrent maintainability. Typical users include SMBs hosting secondary databases, disaster recovery workloads, and other, less time-sensitive applications.

Tier 3

Tier 3 data centers are designed for uninterrupted operations. They feature multiple power and cooling paths, as well as N+1 redundancy across all critical infrastructure components. The hallmark of Tier 3 is concurrent maintainability: technicians can perform maintenance or replace equipment without taking systems offline.

This level of data center redundancy provides uptime of approximately 99.982%, allowing for no more than about 1.6 hours of downtime per year. Because of this, Tier 3 facilities are considered the standard for enterprise environments requiring constant availability. E-commerce platforms, SaaS providers, and large corporations managing customer-facing services need the reliability of Tier 3 facilities. Although they require greater investment in design, energy distribution, and operational processes, Tier 3 environments offer a strong and resilient option, making them the most common choice for enterprise colocation clients.

Tier 4

At the highest level of redundancy lies the Tier 4 data center, which represents full fault tolerance. Every critical subsystem is built in a 2N or 2N+1 configuration, meaning two entirely independent systems (and, in some cases, an additional spare component) operate in parallel. Physical separation between power paths and cooling loops prevents cascading failures.

A Tier 4 data center is designed for around 99.995% uptime per year, or roughly 26 minutes of downtime at most.

Tier 4 facilities are engineered for mission-critical workloads such as government systems, financial trading platforms, and healthcare networks, where even seconds of interruption can have serious consequences. Because Tier 4 environments are both very complex and expensive to maintain, they are typically reserved for organizations whose business continuity depends on near-continuous availability.
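The downtime figures quoted for each tier follow from the uptime percentages by simple arithmetic. The sketch below shows the conversion, assuming a 365-day year (8,760 hours); the function name is illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, assuming a non-leap year


def annual_downtime_hours(uptime_percent: float) -> float:
    """Convert an annual uptime percentage into hours of downtime per year."""
    return (1 - uptime_percent / 100) * HOURS_PER_YEAR


# Uptime percentages as quoted for each tier in this guide.
tiers = [("Tier 1", 99.671), ("Tier 2", 99.741),
         ("Tier 3", 99.982), ("Tier 4", 99.995)]

for name, uptime in tiers:
    hours = annual_downtime_hours(uptime)
    print(f"{name}: {hours:.2f} hours ({hours * 60:.0f} minutes) per year")
```

This yields about 28.8 hours for Tier 1, 22.7 hours for Tier 2, 1.6 hours for Tier 3, and roughly 26 minutes for Tier 4.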

Why Measuring Data Center Redundancy Is Tricky

N-levels and data center tiers provide structure, but they cannot fully capture the realities of operational reliability. Two facilities may share the same redundancy ratings yet perform very differently because of differences in maintenance quality, testing frequency, and the age of key components.

N+1 redundancy performs well in smaller systems but adds limited benefit in large UPS arrays, where a single spare has little impact. Similarly, tier classifications don’t define aspects like switchover timing, manual intervention procedures, or operator readiness, even though these are all essential to actual uptime.
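The point about a single spare in a large array can be made concrete with a rough probability sketch. It rests on loud assumptions: unit failures are treated as independent, and the 1% per-unit failure probability over some fixed window is a made-up figure, not a measured one.

```python
from math import comb


def prob_capacity_lost(n_required: int, spares: int,
                       unit_failure_prob: float) -> float:
    """Probability that more units fail than there are spares.

    Assumes independent failures with the same probability per unit,
    so the number of failed units follows a binomial distribution.
    """
    total = n_required + spares
    p = unit_failure_prob
    prob_ok = sum(
        comb(total, k) * p**k * (1 - p) ** (total - k)
        for k in range(spares + 1)
    )
    return 1 - prob_ok


# Hypothetical 1% failure probability per unit, N+1 in both cases.
small = prob_capacity_lost(n_required=2, spares=1, unit_failure_prob=0.01)
large = prob_capacity_lost(n_required=20, spares=1, unit_failure_prob=0.01)
print(f"N=2,  N+1: {small:.6f}")
print(f"N=20, N+1: {large:.6f}")
```

Under these assumptions, the 21-unit array is far more likely to lose more than one unit at once than the 3-unit array, which is why one spare protects a small system much better than a large one.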

External risks further complicate redundancy assessment. Regional power instability, floods, or fiber disruptions can disable even the most redundant infrastructure. Geographic diversity and interconnection planning are therefore as important as internal design.

Understanding data center redundancy means looking beyond numerical ratings. Proven reliability comes from documented testing, audited results, and operational transparency.

How to Accurately Assess Redundancy

A meaningful assessment of data center redundancy involves both technical verification and operational review.

Begin by mapping the power, cooling, and network infrastructure to identify every backup system. Then, confirm that each is tested under real load and that switchover procedures are clearly defined.

Finally, take a data center tour, and ask for detailed information about how backup systems activate, how long transitions take, and whether they occur automatically or manually. Historical uptime reports and incident logs reveal how the facility performs under pressure and whether redundancy works as designed.

The evaluation should also consider location and interconnection factors. Redundancy should extend beyond the facility through diverse fiber paths, independent utility sources, and regional site pairings. Some organizations complement physical redundancy with cloud-based replicas or disaster recovery solutions to maintain operations during catastrophic events.

Operational discipline completes the equation. Preventive maintenance, change management, and staff preparedness influence uptime just as much as physical design.

Conclusion

Data center redundancy defines how well a facility withstands component failures and continues to operate under stress. Tier classifications and N-level ratings provide a common framework for comparing facilities, but they become meaningful only when supported by rigorous testing and disciplined maintenance carried out by the data center staff.

Selecting the right redundancy level is a strategic decision that depends on each organization’s risk appetite, service expectations, and financial planning. But in the end, redundancy should be viewed as an evolving discipline of design and continuous verification. When managed effectively, it creates an unshakeable operational foundation that safeguards uptime, strengthens reliability, and supports growth in the long term.

Contact us at Volico Data Centers to learn about the redundancy and reliability of our data center facilities.