Ceph is a revolutionary storage solution. In this post, we’ll explore what you should know about Ceph storage and its most important features.

For starters, Ceph is an open-source platform designed to provide file, block, and object storage on a single system.

Ceph storage is self-healing and self-managing, meaning it can handle planned or unplanned outages on its own.

Further, Ceph is designed for fully distributed operation with no single point of failure.

A Brief Ceph Storage History

Ceph is the brainchild of Sage A. Weil, a computer scientist who conceived the idea in 2006.

Weil promoted Ceph through his company, Inktank Storage. Red Hat acquired Inktank in 2014, with Weil serving as the chief architect responsible for Ceph development.

Why is Ceph Storage Unique?

Ceph runs on storage nodes: commodity servers equipped with many hard drives and flash storage devices.

However, Ceph’s claim to fame is the CRUSH (Controlled Replication Under Scalable Hashing) algorithm.

The algorithm lets storage clients calculate which node to contact to store or retrieve data, without human intervention or a lookup service.

Why is this unique?

… because the algorithm eliminates the need for a centralized registry that tracks where data is located in the cluster (the data location metadata).

Some downsides of having a centralized registry include:

- It is a performance bottleneck that hinders scalability

- It creates a single point of failure

By eliminating the centralized registry for data storage and retrieval, Ceph allows organizations to scale their storage capacity and performance without compromising availability.
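The core idea, that a client computes where data lives instead of asking a registry, can be sketched in a few lines of Python. This is a simplified stand-in, not CRUSH itself: it uses rendezvous (highest-random-weight) hashing over a flat list of nodes, and the function and node names are hypothetical rather than part of any Ceph API.

```python
import hashlib

# Simplified stand-in for CRUSH: rendezvous hashing, not Ceph's algorithm.


def score(object_name: str, node: str) -> int:
    """Deterministic pseudo-random score for an (object, node) pair."""
    digest = hashlib.sha256(f"{object_name}:{node}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def locate(object_name: str, nodes: list[str], replicas: int = 1) -> list[str]:
    """Compute which nodes hold object_name; no registry lookup involved.

    Any client that knows the same node list reaches the same answer.
    """
    ranked = sorted(nodes, key=lambda node: score(object_name, node), reverse=True)
    return ranked[:replicas]


nodes = ["node-a", "node-b", "node-c", "node-d"]  # hypothetical node names
# Two independent "clients" see the node list in different orders...
client_1 = locate("photos/cat.jpg", nodes, replicas=2)
client_2 = locate("photos/cat.jpg", list(reversed(nodes)), replicas=2)
# ...yet compute the same location without asking anyone.
print(client_1, client_1 == client_2)
```

Because the answer depends only on the object name and the node list, every client agrees on placement as long as it has the same view of the cluster, which is exactly the role the CRUSH map plays.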

At the heart of the CRUSH algorithm is the CRUSH map.

The map describes the storage nodes in the cluster and how they are organized. It is also the main input to the calculation a storage client performs to determine which storage node to contact.

The CRUSH map is distributed across the cluster by special monitor nodes. A small, odd number of monitors, typically three, serves the entire cluster; the count does not grow with the size of your Ceph storage, although larger deployments often run five.
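Ceph monitors agree on the cluster map through a Paxos-based consensus, which only works while a majority (a quorum) of monitors is reachable. The short Python sketch below is plain arithmetic, not Ceph code, showing why small odd monitor counts are the usual choice.

```python
def quorum_size(monitors: int) -> int:
    """Smallest strict majority of the monitor group."""
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    """Monitors that can be down while a majority is still reachable."""
    return monitors - quorum_size(monitors)


for n in (1, 2, 3, 4, 5):
    print(f"{n} monitors: quorum {quorum_size(n)}, tolerates {tolerated_failures(n)} down")
```

An even count buys no extra tolerance: four monitors survive one failure, just like three, while adding one more member that must stay in sync.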

Side note: Storage clients and storage nodes are responsible for contacting the monitor nodes to obtain the current CRUSH map.

Seen this way, Ceph does have a centralized registry. However, it serves a different purpose than the registry in a traditional storage solution.

It tracks the cluster’s state, a task that is far easier to scale than tracking where every piece of data is stored and retrieved.

Remember, Ceph monitor nodes don’t process data or store data location metadata. They only maintain the CRUSH map that storage nodes and clients rely on.

In addition, data flows directly between the client node and the storage node, in both directions.

Ceph Storage Key Functionalities

Here are Ceph’s most notable features and functionalities:

Ceph Storage Scalability

As stated, Ceph’s storage client directly contacts the relevant storage node to retrieve and store data.

Besides, there are no intermediate components between them apart from the network, which you can configure to suit your needs.

The lack of intermediaries and proxies allows Ceph to scale in performance and capacity.
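Because placement is computed rather than looked up, growing the cluster only moves the data that should now live on the new hardware. The Python sketch below illustrates this with rendezvous hashing, used here as a simplified stand-in for CRUSH (object and node names are hypothetical): it counts how many of 10,000 objects change their primary node when a sixth node joins five.

```python
import hashlib


def score(obj: str, node: str) -> int:
    """Deterministic pseudo-random score for an (object, node) pair."""
    digest = hashlib.sha256(f"{obj}:{node}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def primary(obj: str, nodes: list[str]) -> str:
    """The highest-scoring node stores the object (stand-in for CRUSH)."""
    return max(nodes, key=lambda node: score(obj, node))


objects = [f"obj-{i}" for i in range(10_000)]
before = [f"node-{i}" for i in range(5)]
after = before + ["node-5"]  # grow the cluster from five to six nodes

moved = [o for o in objects if primary(o, before) != primary(o, after)]
print(f"{len(moved) / len(objects):.1%} of objects moved")
# Every object that moved went to the new node; nothing shuffled between old nodes.
print(all(primary(o, after) == "node-5" for o in moved))
```

Roughly one sixth of the objects move, all of them onto the new node, so rebalancing work is proportional to the capacity added rather than to the size of the cluster.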

Ceph Storage Redundancy

Durability is one of the biggest challenges of handling large data sets.

Ceph provides data durability through redundancy, using either replication or erasure coding:

Ceph Storage Replication

Ceph’s replication mimics RAID (redundant array of independent disks) but with some differences.

Ceph replicates data across racks, nodes, or object storage daemons (OSDs), depending on your cluster configuration. It breaks data into small objects and uses the CRUSH algorithm to spread the objects and their replicas evenly across your cluster.

For example, if you set three replicas on a six-node cluster, each object’s three copies are stored on three different nodes, while the copies of all objects together are spread across all six nodes.
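The Python sketch below illustrates that distribution for 6,000 hypothetical objects on a six-node cluster. It uses rendezvous hashing as a simplified stand-in for CRUSH and skips placement groups, which real Ceph places between objects and OSDs; node and object names are made up.

```python
import hashlib
from collections import Counter

NODES = [f"node-{i}" for i in range(1, 7)]  # hypothetical six-node cluster
REPLICAS = 3


def score(obj: str, node: str) -> int:
    """Deterministic pseudo-random score for an (object, node) pair."""
    digest = hashlib.sha256(f"{obj}:{node}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def replica_nodes(obj: str) -> list[str]:
    """Top-scoring nodes for this object: always three *different* nodes."""
    return sorted(NODES, key=lambda n: score(obj, n), reverse=True)[:REPLICAS]


copies_per_node = Counter()
for i in range(6_000):
    copies_per_node.update(replica_nodes(f"obj-{i}"))

print(replica_nodes("obj-0"))  # three distinct nodes
print(dict(copies_per_node))   # roughly 3,000 copies on each of the six nodes
```

Each object sits on only three nodes, but the 18,000 copies end up spread roughly evenly over all six.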

It is therefore crucial to configure replication correctly:

- Single-node cluster: Set data replication across the OSDs, since only one node is available.

Note: You cannot replicate data at the node level on a single node, and without replication across OSDs, a single OSD failure would lose data.

- Multi-node cluster: Your replication factor determines how many nodes or OSDs can fail without data loss; a factor of n tolerates n − 1 failures.

Note: Replication reduces the usable space in your cluster.

For instance, a replication factor of three at the node level means only one-third of the cluster’s raw space is available for data.
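The capacity trade-off is simple arithmetic. The Python sketch below spells it out for a hypothetical six-node cluster with 10 TB of raw disk per node. It ignores the free headroom a real Ceph cluster must keep (Ceph warns as OSDs get nearly full and stops writes when they are full), so treat the result as an upper bound.

```python
def usable_capacity_tb(raw_tb: float, replication_factor: int) -> float:
    """Usable capacity when every object is stored replication_factor times."""
    if replication_factor < 1:
        raise ValueError("replication_factor must be at least 1")
    return raw_tb / replication_factor


def failures_tolerated(replication_factor: int) -> int:
    """Copies (nodes or OSDs, per failure domain) you can lose without data loss."""
    return replication_factor - 1


# Hypothetical cluster: six nodes with 10 TB of raw disk each.
raw = 6 * 10
print(usable_capacity_tb(raw, 3))  # 20.0
print(failures_tolerated(3))       # 2
```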

Ceph data replication is fast; its speed is limited mainly by the read/write performance of your OSDs.

Erasure Coding

Erasure coding encodes the original data so that you need only a subset of the stored fragments to reconstruct it when retrieving the information.

It splits each object into k data fragments and computes m parity fragments from them.

For example, if your data’s value is 62, you can split it into:

- x = 6

- y = 2

Erasure coding then computes parity fragments such as:

- x + y = 8

- x − y = 4

- 2x + y = 14

In the equations above, k = 2 and m = 3: k is the number of data fragments (x and y), and m is the number of parity fragments.

If a node or disk fails, any two of the five stored fragments are enough to recover the original data, so erasure coding keeps your data durable even after losing up to three fragments.
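The recovery claim can be checked with a toy decoder. The Python sketch below treats each stored fragment as a linear combination of x and y, computes the fragments from x = 6 and y = 2, and solves a 2×2 system (Cramer’s rule) for every possible pair of survivors. Real Ceph erasure-code plugins such as jerasure or ISA-L use Reed-Solomon-style codes over finite fields on byte data, not integer equations, so this only illustrates the “any k of k + m” idea.

```python
from fractions import Fraction
from itertools import combinations

# Toy code (illustration only): each fragment is a*x + b*y for data fragments x, y.
CODE = {
    "x": (1, 0),
    "y": (0, 1),
    "x+y": (1, 1),
    "x-y": (1, -1),
    "2x+y": (2, 1),
}


def encode(x: int, y: int) -> dict[str, int]:
    """Compute all five stored fragments from the two data fragments."""
    return {name: a * x + b * y for name, (a, b) in CODE.items()}


def recover(survivors: dict[str, int]) -> tuple[Fraction, Fraction]:
    """Rebuild (x, y) from any two surviving fragments via Cramer's rule."""
    (n1, v1), (n2, v2) = list(survivors.items())[:2]
    a1, b1 = CODE[n1]
    a2, b2 = CODE[n2]
    det = a1 * b2 - a2 * b1  # non-zero for every pair of rows in CODE
    x = Fraction(v1 * b2 - v2 * b1, det)
    y = Fraction(a1 * v2 - a2 * v1, det)
    return x, y


stored = encode(6, 2)  # {'x': 6, 'y': 2, 'x+y': 8, 'x-y': 4, '2x+y': 14}
for pair in combinations(stored, 2):
    x, y = recover({name: stored[name] for name in pair})
    assert (x, y) == (6, 2), pair
print("any 2 of", len(stored), "fragments recover x = 6, y = 2")
```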

Why is this crucial? … because, for a comparable level of protection, parity fragments typically occupy less space than full data replicas.

You can think of erasure coding as a way to optimize storage space.
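To put numbers on the space savings, the Python sketch below compares raw-space overhead (raw bytes written per byte of user data) and the number of losses each scheme survives. The k and m values are examples chosen for illustration, not recommendations.

```python
from fractions import Fraction


def replication_overhead(replicas: int) -> Fraction:
    """Raw bytes written per byte of user data with n-way replication."""
    return Fraction(replicas)


def ec_overhead(k: int, m: int) -> Fraction:
    """Raw bytes written per byte of user data with k data + m parity fragments."""
    return Fraction(k + m, k)


# (scheme, raw-space overhead, number of copies/fragments that can be lost)
schemes = [
    ("3x replication", replication_overhead(3), 3 - 1),
    ("erasure coding k=2, m=3", ec_overhead(2, 3), 3),
    ("erasure coding k=4, m=2", ec_overhead(4, 2), 2),
]
for name, overhead, losses in schemes:
    print(f"{name}: {float(overhead):.2f}x raw space, survives {losses} losses")
```

With k = 4 and m = 2, erasure coding survives the same two losses as three-way replication while using half the raw space (1.5x versus 3x).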

The process isn’t without its downsides, though. The cluster needs more time and CPU to compute, read, and write parity fragments, making erasure coding slower than replication.

For that reason, erasure coding works best for clusters that store massive amounts of cold (rarely accessed) data.

Failure Domains

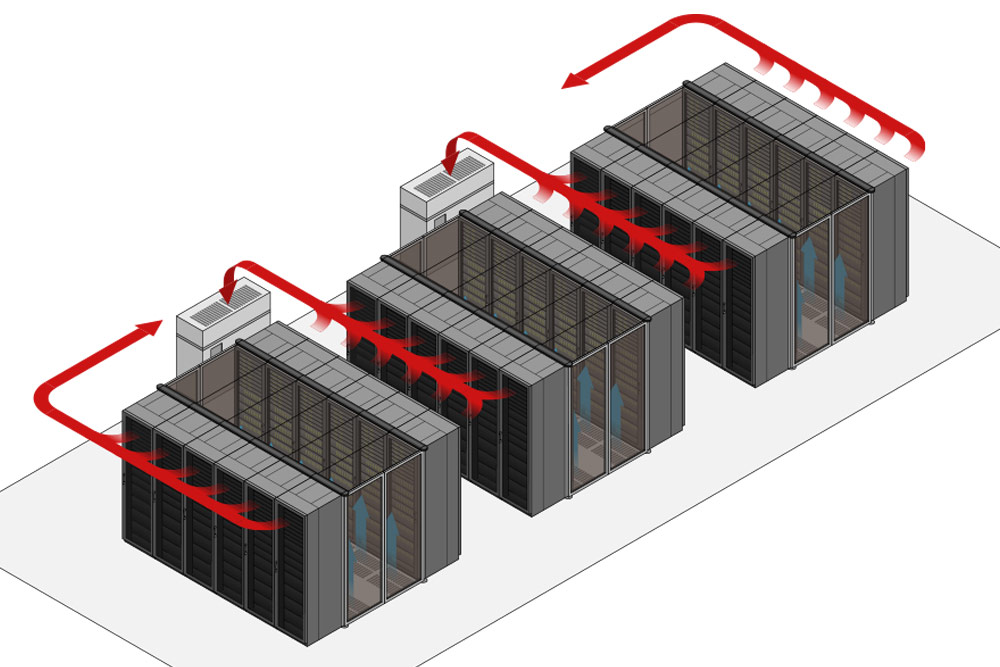

Ceph is data center ready.

The CRUSH map can represent your organization’s physical data center infrastructure, from rooms and floors to rows and racks.

In addition, it allows you to customize your data center’s topology. That way, you can create distinct placement policies that tell Ceph which failure domains (for example, hosts, racks, or rows) copies of your data must be spread across.

With such a topology in place, you can lose an entire rack or a row of racks and your cluster can remain operational, albeit with reduced capacity and performance.
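CRUSH implements this with rules that walk the hierarchy and pick each replica from a different bucket of a chosen type, such as a different rack. The Python sketch below imitates that idea with a hypothetical four-rack topology and rendezvous hashing; the topology format, names, and selection method are illustrative assumptions, not Ceph’s actual CRUSH implementation.

```python
import hashlib

# Hypothetical CRUSH-map-like hierarchy (rack -> hosts); not a real CRUSH map format.
TOPOLOGY = {
    "rack-1": ["host-1a", "host-1b"],
    "rack-2": ["host-2a", "host-2b"],
    "rack-3": ["host-3a", "host-3b"],
    "rack-4": ["host-4a", "host-4b"],
}


def score(key: str, item: str) -> int:
    """Deterministic pseudo-random score for a (key, item) pair."""
    digest = hashlib.sha256(f"{key}:{item}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place_across_racks(obj: str, replicas: int) -> list[str]:
    """Pick `replicas` distinct racks, then one host inside each rack."""
    if replicas > len(TOPOLOGY):
        raise ValueError("not enough racks for this failure-domain rule")
    racks = sorted(TOPOLOGY, key=lambda r: score(obj, r), reverse=True)[:replicas]
    return [max(TOPOLOGY[r], key=lambda h: score(obj, h)) for r in racks]


def rack_of(host: str) -> str:
    """Find the rack a host belongs to."""
    return next(r for r, hosts in TOPOLOGY.items() if host in hosts)


hosts = place_across_racks("vm-image-42", replicas=3)
print(hosts)
# Losing any single rack removes at most one of the three copies.
assert len({rack_of(h) for h in hosts}) == 3
```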

Because that level of redundancy can cost you a lot of storage space, you may want to apply it to only some of your data.

That isn’t a problem: you can create multiple pools, each with its own independent protection settings.

Ceph Storage Pros and Cons

Ceph has its upsides and downsides as follows:

Pros

- High performance with low latency and minimal downtime

- Ability to grow with your data, enabling scalability

- No limiting licenses

- Automated cluster management and self-healing that help keep your data safe

- Open-source development that encourages innovation through collaboration

Cons

- You need a robust, high-bandwidth network to get the full benefit of Ceph’s functionality

- Set-up is relatively time-consuming

Ready to Get Started with Ceph Open-Source Storage?

Ceph and Ceph storage clusters bring the high scalability your organization needs to grow in a cloud environment.

From start-ups to multinational corporations, SMEs, healthcare facilities, and academic institutions, Ceph is the future of data management, storage, and decision-making across all enterprises.

Call us at 888 865 4261 or chat with one of our Ceph experts if you’d like to see Ceph in action or learn how this revolutionary storage solution can work for your organization or industry.