The rise of Artificial Intelligence has recently triggered a significant wave of transformation in the data center world. The relationship between data centers and AI adoption has proven to be a complex one, rippling out to many areas of our lives. The potential of Artificial Intelligence is very promising; however, data centers need to change before they can adopt it, and traditional sites are being significantly transformed to meet the evolving computational demands of this new technology.

Leveraging this potential comes with some risks for data centers, and meticulous infrastructure design is becoming more important than ever. New power and cooling strategies are in the making, and new networking technologies are emerging to support the growth of future AI applications and data centers for AI.

It’s no exaggeration to say that, step by step, AI is reshaping data centers. This blog explores the challenges and intricacies of this transformation and looks at the latest trends and approaches in the data center world.

Data centers for AI: changes in the infrastructure

AI data center requirements are radically different from those of traditional facilities, as AI requires significantly more power and bandwidth than traditional computing. This dramatic change is putting a strain on data center infrastructures all over the world. As a result, we are seeing major transformations in data centers for AI adoption.

Traditional data centers running regular computing tasks have relied on CPU-powered racks for decades. Now, with the sudden rise of AI, data centers need to adapt by switching to GPU-powered racks. Moreover, AI workloads run on multiple GPUs simultaneously, sharing and syncing information between them, and the number of those GPUs keeps rising.

The story doesn’t end with replacing hardware, though. Data centers for AI workloads need more power, and that higher power use in turn calls for better cooling and more efficiently organized physical space within the data center. So, in order to accommodate AI, data center architectures need updates, and these can be an expensive investment.

A difficult choice

Many data center providers find themselves in a difficult position. Upgrading data centers for AI infrastructure could mean there’s no going back to traditional CPU workloads. Overcommitting at this stage is hazardous, and the risk is high because the technology is still in its infancy. Predictions can be tricky because of the enthusiasm for novelty, but ending up with underused data center capacity can lead to significant profit loss and high maintenance costs.

Many new data center developments have been put on hold until the picture becomes clearer. The market is still in a waiting phase, trying to figure out the next best strategic step in a situation where every step counts and every step is irrevocable.

Upgrading data centers for AI comes with demand for power and bandwidth

The most pressing challenges for data centers committing to AI are, of course, the outsized power demand, cooling, and extreme bandwidth needs. Running AI applications on a platform like Nvidia’s DGX, for example, requires a lot of power and bandwidth. DGX systems like the A100, H100, and GH200 (presented in May 2023) consume 6.5-11kW per 6U unit. As for bandwidth demand, GPUs need up to 8x100Gb/s with EDR connections or, in the case of HDR connections, 200Gb/s per link. With eight connections per unit, that adds up to 8x200G per unit.
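To make these figures concrete, here is a minimal back-of-the-envelope sketch in Python. It uses the numbers quoted above (6.5 to 11 kW per 6U unit, eight links at 100 or 200 Gb/s). The 42U rack height and the 20 kW rack power budget are hypothetical values chosen only for illustration, not figures from any specific site.

```python
def unit_bandwidth_gbps(links_per_unit: int, link_speed_gbps: int) -> int:
    """Aggregate network bandwidth of one unit, in Gb/s."""
    return links_per_unit * link_speed_gbps


def units_per_rack(rack_height_u: int, unit_height_u: int,
                   rack_power_kw: float, unit_power_kw: float) -> int:
    """Units that fit in one rack, limited by space or power, whichever is tighter."""
    by_space = rack_height_u // unit_height_u
    by_power = int(rack_power_kw // unit_power_kw)
    return min(by_space, by_power)


# Figures quoted in the text.
LINKS_PER_UNIT = 8
UNIT_HEIGHT_U = 6

# Hypothetical rack, for illustration only.
RACK_HEIGHT_U = 42
RACK_POWER_KW = 20.0

print(unit_bandwidth_gbps(LINKS_PER_UNIT, 100))  # EDR: 800 Gb/s per unit
print(unit_bandwidth_gbps(LINKS_PER_UNIT, 200))  # HDR: 1600 Gb/s per unit
print(units_per_rack(RACK_HEIGHT_U, UNIT_HEIGHT_U, RACK_POWER_KW, 6.5))   # 3
print(units_per_rack(RACK_HEIGHT_U, UNIT_HEIGHT_U, RACK_POWER_KW, 11.0))  # 1
```

Under these assumptions, seven units would fit in the rack by height alone, but the power budget allows only one to three. That gap is why power and cooling, rather than floor space, tend to be the limiting factors.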

The industry is looking ahead to a period of transition in the coming years, in which the data center as we know it today will transform to meet new computing needs.

Adaptation solutions in data centers for AI workloads

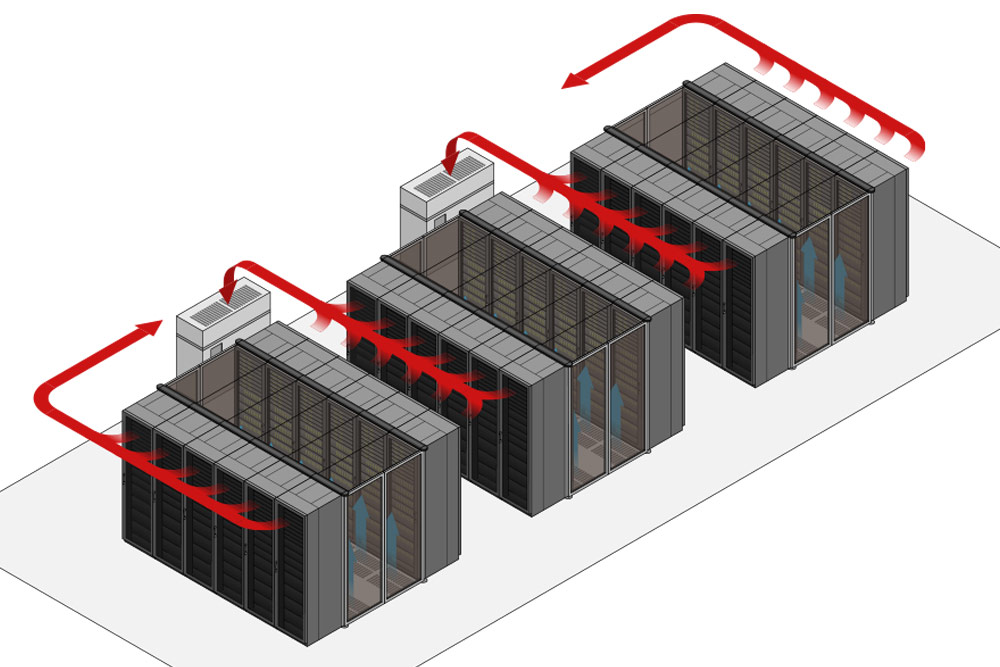

As network demands surge, accommodating increased cooling and power requirements calls for new approaches and solutions. Network managers are reassessing their designs, often adjusting network blueprints and spacing out GPU cabinets as a result. In many cases, we can see a shift toward End of Row (EOR) configurations for better cooling management.

The greater physical distance between switches and GPUs necessitates adjustments to network layouts, usually solved by adding more fiber cabling to complement the switch-to-switch connections. As an alternative to direct attach cables (DACs), which are limited to 3-5m at these speeds, active optical cables (AOCs) are emerging as a viable option. AOCs have proven advantageous for their extended reach, lower power consumption, and lower latency.
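The reach constraint can be expressed as a simple selection rule. The sketch below is illustrative only. It takes the conservative end of the 3-5m DAC range mentioned above as the default threshold. The `aoc_max_m` default is a placeholder assumption, not a specification, and the function and parameter names are hypothetical.

```python
def choose_cable(distance_m: float,
                 dac_max_m: float = 3.0,
                 aoc_max_m: float = 100.0) -> str:
    """Pick a cable type for a switch-to-GPU link based on its length.

    dac_max_m: conservative end of the 3-5m DAC range quoted in the text.
    aoc_max_m: placeholder assumption; check the vendor's datasheet.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if distance_m <= dac_max_m:
        return "DAC"
    if distance_m <= aoc_max_m:
        return "AOC"
    # Beyond the assumed AOC reach, fall back to separately installed fiber.
    return "fiber"


# In-rack link vs. a link to an End of Row switch several cabinets away.
print(choose_cable(2.0))
print(choose_cable(15.0))
```

Spacing out GPU cabinets pushes more links past the DAC threshold, which is why the cabling mix shifts toward optical options.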

AI networking: InfiniBand or Ethernet?

A competition seems to be emerging between InfiniBand and Ethernet for supporting AI network infrastructures. Nvidia’s InfiniBand technology is popular among today’s AI networks; however, the mature Ethernet technology continues to dominate most data centers globally. Consequently, for generative AI to truly grow and spread to public data centers and cloud infrastructures, Ethernet has to gain more traction as a network technology in data centers for AI. Broadcom’s Jericho3-AI chip, part of its StrataDNX family, is an emerging design for building AI clusters on Ethernet.

Data center design and forward planning have never been so important

Planning will play a major role in the upcoming period, as data centers look to minimize the chance of ending up in unprofitable situations. However, new data center projects can’t stall forever, waiting for the market to offer certainty. The demand for data centers for AI is high, and providers eventually need to make a move in order to get ahead of the competition and secure their market share.

To mitigate the chances of expensive miscalculations, data center operators need to seek out the advice of experts in new technologies to make the best decisions on security, power, cooling, and cabling. The planning phase of new data centers will be more crucial than ever for avoiding errors and unexpected hindrances in the future.

Walking on a knife’s edge

The principles of data center infrastructure are changing in real time due to the sudden need to accommodate AI. Operators of data centers for AI have to balance the risk of overcommitment against the risk of losing their competitive edge in the market. The choice is not easy, as the landscape is constantly changing. Still, data center providers can mitigate the risks by meticulously planning their sites to accommodate not only AI but also all the future changes that can come with it.

Leveraging AI within the data center

Besides market competitiveness and potential profit, data centers for AI can benefit greatly from adopting the technology on-site. Today, many data center operators are researching how AI will impact the data center so they can leverage the benefits early on. AI can be a powerful tool for improving efficiency and security, and it can also be useful for optimizing workflows.

With access to real-time monitoring data, the technology can be used to map out inefficiencies in the data center. Based on these insights, improvements can take place in a variety of areas, like security system operations, hardware monitoring and maintenance, power use monitoring, or measuring and adjusting temperature and humidity levels.

By using the data from access control systems, AI can be a valuable contributor to data center sustainability. Based on real-time occupancy data, temperature, lighting, and HVAC systems can be adjusted to save energy and water. This way, consumption can be reduced across the whole data center building, focusing energy where it is most necessary.
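As a rough illustration of the data flow, the sketch below maps an occupancy signal for a staffed zone to lighting and cooling settings. It is a deliberately simple rule, not an AI model. A real deployment would presumably learn such a policy from monitoring data. The class, the function, and the 22 °C and 4 °C values are hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass
class ZonePlan:
    lights_on: bool
    setpoint_c: float  # cooling setpoint for the zone, in degrees Celsius


def plan_zone(occupied: bool,
              comfort_setpoint_c: float = 22.0,
              setback_c: float = 4.0) -> ZonePlan:
    """Relax lighting and cooling in a staffed zone when nobody is present.

    Both default values are illustrative assumptions.
    """
    if occupied:
        return ZonePlan(lights_on=True, setpoint_c=comfort_setpoint_c)
    # Empty zone: lights off, and cooling allowed to drift to a higher setpoint.
    return ZonePlan(lights_on=False, setpoint_c=comfort_setpoint_c + setback_c)


# Occupancy would come from the access control system; here it is hard-coded.
print(plan_zone(occupied=True))
print(plan_zone(occupied=False))
```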

Upgrading data centers for on-site AI use can have many benefits beyond mere energy efficiency, however. AI can help optimize resource distribution based on the real-time demand of specific workloads. AI systems are capable of learning and adapting to changes. This dynamic approach can tremendously benefit data centers, from improved response times and scalability to cost optimization.
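One simple way to picture demand-based resource distribution is proportional sharing: each workload gets what it asks for while capacity lasts, and everything is scaled down evenly once demand exceeds capacity. The sketch below shows only this baseline rule, under the assumption that demand is reported as a single number per workload. The workload names are hypothetical, and an AI-driven scheduler would replace the fixed rule with a learned one.

```python
def allocate(capacity: float, demand: dict[str, float]) -> dict[str, float]:
    """Split a shared capacity across workloads in proportion to their demand."""
    total = sum(demand.values())
    if total <= capacity:
        # Enough capacity for everyone: grant each request in full.
        return dict(demand)
    # Oversubscribed: scale every request by the same factor.
    return {name: d * capacity / total for name, d in demand.items()}


# Hypothetical workloads and units (for example, GPU-hours per interval).
print(allocate(100, {"training": 30, "inference": 50}))
print(allocate(100, {"training": 150, "inference": 50}))
```

Re-running the allocation whenever fresh demand readings arrive gives a basic form of the dynamic adjustment described above.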

Conclusion

The evolution of data centers for AI adoption is already a reality, with many providers responding to the surge in demand by going hybrid. The transition is resource-intensive for the data center world; however, most consider the powerful potential to be worth the investment. As the new technologies evolve, flexibility, scalability, and adaptability will continue to be paramount for success in an exciting and versatile industry.