The recent surge of large language models has created considerable excitement, poking a hole through reality as we know it. These models have reached a vast number of people, enabling the use of generative AI for a wide range of purposes all over the world. And while a few science fiction writers anticipated their appearance, there was no way to prepare for the paradigm shift generative AI has set in motion. The widespread availability of language models like ChatGPT has opened up unprecedented opportunities for growth and development. However, it has also brought challenges of equal magnitude. We find ourselves having to redefine our notions and reevaluate our needs, and the data center requirements of generative AI are among the most pressing topics today.

The enormous potential of this powerful new technology is hard to ignore. However, leveraging this potential comes with a great deal of responsibility. A balance must be struck between the growth of generative AI and the infrastructure needs of data centers, and the most pressing issues are power-related.

These changes are pushing the industry to redefine basic notions such as what a data center is and how it works. This post looks into the symbiotic relationship between generative AI and data centers and offers a glimpse into how data center requirements are changing to accommodate this new reality.

Cost-Efficiency Is No Longer the Only Priority

The data center needs of generative AI are changing, and these changes affect both data center infrastructure and long-term strategic planning. Companies working with generative AI can no longer focus only on cost-efficient solutions when scaling up and moving workloads to a colocation provider. Choosing the lowest cost per unit of compute power and real estate might not be worth the compromise in the long term.

Leveraging AI requires smarter positioning of infrastructure. AI models rely on varied workloads, which makes a dispersed infrastructure more efficient. Certain workloads, such as real-time healthcare data processing, need to be handled in specific locations. As a consequence, placing infrastructure in densely populated areas is important for securing excellent network access and interconnection options.

Given how closely generative AI and data center infrastructure are linked, we need to rethink our approach in several areas. As the emphasis on this new technology grows rapidly, responsible, forward-thinking strategies will remain crucial to the data center industry in the near future.

How Is Generative AI Changing Data Center Requirements?

The scale at which artificial intelligence keeps growing is already affecting data centers, networks, and computing. Generative AI and data center infrastructure are inseparable. And while the growth of AI can’t be predicted accurately, data centers bear the burden of providing the space and hardware that future versions will need. Several areas already require significant changes.

More Compute Capacity

AI applications need significant amounts of computing power, especially deep learning models, which are trained by processing enormous amounts of data. Making this possible requires substantial computational resources, which in turn drives demand for powerful hardware in data centers.
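To give a sense of the scale involved, the sketch below uses a widely cited rule of thumb for transformer training compute, C ≈ 6·N·D, where N is the parameter count and D the number of training tokens. This is an approximation, not an exact accounting. The 300 billion token count, the 100 TFLOP/s accelerator, and the 40% utilization are hypothetical inputs chosen for illustration, and the function names are our own.

```python
def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate total training compute with the C ~= 6 * N * D rule of thumb.

    The factor 6 covers roughly 2 FLOPs per parameter per token for the
    forward pass and about 4 for the backward pass.
    """
    return 6.0 * num_params * num_tokens


def gpu_days(total_flops: float, flops_per_gpu: float, utilization: float) -> float:
    """Convert total FLOPs into accelerator-days at a sustained fraction of peak."""
    seconds = total_flops / (flops_per_gpu * utilization)
    return seconds / 86_400  # seconds per day


if __name__ == "__main__":
    # Hypothetical inputs: 175 billion parameters, 300 billion training tokens.
    flops = training_flops(175e9, 300e9)
    print(f"{flops:.2e} FLOPs")  # 3.15e+23
    # Hypothetical accelerator: 100 TFLOP/s peak, 40% sustained utilization.
    print(f"{gpu_days(flops, 100e12, 0.4):,.0f} GPU-days")
```

Even under these rough assumptions, a single training run lands in the tens of thousands of accelerator-days, which is why compute density has become a core data center concern.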

As large language models like GPT-4 expand, they create more and more use cases. At the time of writing, they reportedly reach nearly 2 billion monthly users. This drives demand for network capacity that can handle real-time interactions and pushes data centers to develop more efficient solutions.

Power Demand and Scalability

In 2022, data centers consumed approximately 230–340 terawatt hours (TWh) of electricity globally, about 1–1.3% of global electricity demand. Data transmission networks consumed 260–360 TWh, and cryptocurrency mining added an estimated 110 TWh, roughly 0.4% of annual demand. This amount of energy exceeds the yearly consumption of some countries, and it is expected to grow at an unprecedented pace to support machine learning and other AI workloads.
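As a rough cross-check of the 2022 figures quoted above, the sketch below adds the ranges together. It assumes the three categories are reported separately and do not overlap, and it treats the single cryptocurrency estimate as a range with equal ends. The variable and function names are illustrative only.

```python
# 2022 electricity estimates quoted in this post, as (low, high) in TWh.
# Assumption: the three categories are separate and do not overlap.
ESTIMATES_TWH: dict[str, tuple[float, float]] = {
    "data centers": (230, 340),
    "data transmission networks": (260, 360),
    "cryptocurrency mining": (110, 110),  # single point estimate
}


def combined_range(estimates: dict[str, tuple[float, float]]) -> tuple[float, float]:
    """Sum the low ends and the high ends separately to get an overall range."""
    low = sum(lo for lo, _ in estimates.values())
    high = sum(hi for _, hi in estimates.values())
    return low, high


if __name__ == "__main__":
    low, high = combined_range(ESTIMATES_TWH)
    print(f"Combined: {low:.0f}-{high:.0f} TWh")
```

Under that assumption, the three categories together account for roughly 600 to 810 TWh per year.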

This expected growth raises the question of how data center capacity can scale alongside generative AI. Flexibility, scalability, and resource efficiency will be top priorities for future data center designs. Since the scale of growth is unpredictable, designs that plan for future adaptation and versatility will remain a primary concern.

Advanced Networking and Connectivity Requirements

The need for bandwidth and capacity grows as AI models evolve and become more complex, and data center requirements change in step with generative AI. Data center providers are therefore urged to adopt advanced networking solutions for better connectivity and seamless data transfer.

AI models, particularly machine learning models, rely on vast data sets for input and training. This drives demand for high-speed, high-capacity networks. As a result, higher-capacity hardware (switches, fiber, routers, interconnects) becomes crucial for handling this data traffic efficiently.
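A simple way to see why link capacity matters is to estimate how long it takes to move a training data set. The sketch below divides data set size by usable bandwidth. The 100 TB data set, the link speeds, and the 90% efficiency factor are hypothetical values, and the function name is our own.

```python
def transfer_time_hours(dataset_tb: float, link_gbps: float, efficiency: float = 0.9) -> float:
    """Estimate hours needed to move a data set over a network link.

    dataset_tb: data set size in terabytes (10**12 bytes)
    link_gbps:  nominal link speed in gigabits per second (10**9 bits/s)
    efficiency: assumed fraction of nominal bandwidth actually achieved
    """
    bits = dataset_tb * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    # Hypothetical 100 TB training corpus over three common link speeds.
    for gbps in (10, 100, 400):
        print(f"{gbps:>3} Gb/s: {transfer_time_hours(100, gbps):6.1f} h")
```

With these assumptions, the same transfer drops from roughly a day on a 10 Gb/s link to well under an hour at 400 Gb/s.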

Demand on the Edge

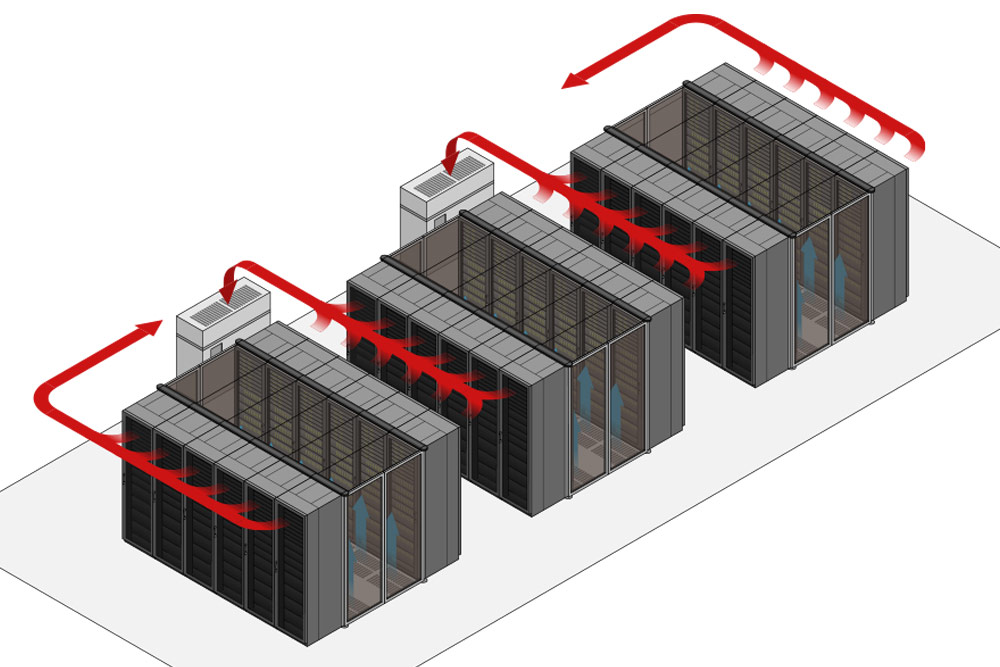

The growth of AI and the expansion of the Internet of Things (IoT) require low latency and real-time processing of unprecedented speed and quality. The importance of edge computing is undeniable: moving workloads closer to the source of the data brings significant improvements by reducing both bandwidth consumption and latency.
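Part of the latency benefit of the edge comes from physics: signals in optical fiber travel at roughly two-thirds of the speed of light, or about 200 km per millisecond. The sketch below computes only this propagation floor; routing, queuing, and processing add more on top. The site labels and distances are hypothetical.

```python
# Approximate signal speed in optical fiber: ~200,000 km/s, i.e. ~200 km per ms.
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip propagation delay over fiber.

    Ignores routing, queuing, and processing time, which only add to this floor.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    # Hypothetical distances between a user and the serving facility.
    for label, km in (("edge site", 50), ("regional data center", 500), ("distant data center", 2000)):
        print(f"{label:>22}: {round_trip_ms(km):5.1f} ms")
```

No amount of faster hardware removes this delay, so shortening the distance is the only way to reduce it, which is the core argument for edge deployments.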

The impact of generative AI on data center requirements shows in the adoption of innovative data center designs. Distributed AI architectures spanning edge devices and networks are among the most important new features. By improving data flow and increasing the efficiency and speed of operations, edge computing boosts performance. As a bonus, it noticeably improves the user experience.

Generative AI and Stricter Data Center Security Requirements

The prevalence of AI across many fields increases the need for stronger security measures both in data centers and at the edge. AI used for cybercrime poses a real threat, and deepfakes have emerged as a genuine and sophisticated cybersecurity concern. Robust measures are needed to counter future attacks. Security threats evolve at the pace of new technology, so security measures always need to stay one step ahead of them.

The good news is that AI can be trained to detect security threats. It can pinpoint potential risks and recognize anomalous behavior by analyzing network traffic in real time. However, cybercriminals can also use AI to gain access to a system. Data centers must therefore be prepared to ward off these threats by improving security at the network infrastructure level and deploying a range of other security mechanisms.
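The core idea behind flagging anomalous traffic can be illustrated with a much simpler technique than the AI-based systems described above: a rolling z-score, which flags a sample that sits far outside the recent average. The sketch below is that statistical baseline, not a trained model. The window size, threshold, function name, and traffic numbers are all hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(samples: list[float], window: int = 10, threshold: float = 3.0) -> list[int]:
    """Return indices of samples that deviate from the preceding `window` samples
    by more than `threshold` standard deviations (a rolling z-score)."""
    flagged = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip flat histories, where the z-score is undefined.
        if sigma > 0 and abs(samples[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Hypothetical per-second request counts with a sudden burst at index 12.
    traffic = [100, 98, 103, 101, 99, 102, 97, 100, 104, 99, 101, 100, 950, 102, 98]
    print(flag_anomalies(traffic))  # [12]
```

Machine learning models extend this idea by learning what "normal" looks like across many signals at once, rather than a single traffic counter.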

Generative AI and Data Center Changes Call for Energy-Efficient and Sustainable Solutions

Energy efficiency is a real concern for the future of data centers. While there are many examples of data centers powered by renewable energy, it is challenging to power a data center with solar panels alone. Generative AI drives up the power consumption of data centers because, as discussed above, training LLMs and other generative AI models requires large amounts of computation as well as power-intensive data transfer over high-capacity networks.

If we look at the numbers, OpenAI’s GPT-2, with its 1.5 billion parameters, needed 28,000 kWh of energy for its training. GPT-3, with its 175 billion parameters, needed 284,000 kWh. That is already roughly a tenfold increase over the previous model, while the parameter count grew more than a hundredfold. As a result, generative AI creates unprecedented demand for power-efficient data center solutions.
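The growth factors behind the GPT-2 and GPT-3 comparison can be checked directly from the figures quoted above. The sketch below only divides those figures; the dictionary layout and function name are illustrative.

```python
# Training energy and parameter counts as quoted in this post.
MODELS = {
    "GPT-2": {"params": 1.5e9, "energy_kwh": 28_000},
    "GPT-3": {"params": 175e9, "energy_kwh": 284_000},
}


def growth(metric: str, old: str = "GPT-2", new: str = "GPT-3") -> float:
    """Ratio of a metric between two models (new / old)."""
    return MODELS[new][metric] / MODELS[old][metric]


if __name__ == "__main__":
    print(f"Energy grew about {growth('energy_kwh'):.1f}x")   # 10.1x
    print(f"Parameters grew about {growth('params'):.0f}x")   # 117x
```

Based on these numbers, energy per parameter fell between the two generations, yet total training energy still rose about tenfold, which is the figure that matters for power planning.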

Although data centers powered by renewable energy differ from those powered by fossil fuels, the overall carbon footprint of data centers is still immense. As the world continues to adopt generative AI and data centers do everything they can to accommodate it, power usage effectiveness (PUE) becomes paramount.
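Power usage effectiveness is defined as total facility energy divided by the energy delivered to IT equipment, so a PUE of 1.0 would mean no overhead at all. The sketch below computes PUE and the overhead energy (cooling, power conversion, lighting, and so on) implied by a given PUE. The monthly IT load and the two PUE values are hypothetical.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(it_equipment_kwh: float, pue_value: float) -> float:
    """Non-IT energy (cooling, power conversion, lighting, etc.) at a given PUE."""
    return it_equipment_kwh * (pue_value - 1.0)


if __name__ == "__main__":
    # Hypothetical month with 1,000 MWh of IT load.
    it_load = 1_000_000
    for p in (1.2, 1.5):
        print(f"PUE {p}: {overhead_kwh(it_load, p):,.0f} kWh of overhead")
```

Because overhead scales with the IT load, every improvement in PUE becomes more valuable as AI workloads grow.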

If scaling continues at this pace, power demand and carbon footprints will grow proportionately. Renewable energy sources might not be enough to support future data center needs, and more comprehensive power planning will likely become necessary. Some companies are reportedly already turning to nuclear technology to power their data centers.

Conclusion

Data center requirements continue to change in tandem with the worldwide adoption of generative AI. Communications, cloud computing, edge computing, networking, and data management infrastructures are already affected. Essentially, all data center technologies will change in the near future to keep up with the rapid evolution of this paradigm-shifting technology.

Got questions? Want to talk specifics? That’s what we’re here for.

Interested in how generative AI will change data center technology in the coming years? Discover how Volico Data Centers colocation facilities and services can help you with flexible and efficient edge infrastructure, state-of-the-art hardware, and superior connectivity solutions to meet your forward-thinking plans.

• Call: (305) 735-8098

• Chat with a member of our team to discuss which solution best fits your needs.